The best way to approach a problem is typically to look at it from different angles, to turn it over and to discuss it until a solution can be found. Similarly, it is important to try to bring different perspectives into your work to develop your skills and extend your toolbox. This article explores the parallels between software testing and science, and highlights what testers can learn from the scientific method.

What is the Scientific Method?

The ultimate goal of all sciences is knowledge, and to acquire new knowledge, scientists make observations and analyze data – activities we normally refer to as research. The scientific method is simply a collection of techniques that scientists use to investigate phenomena and gain new information. For a method to be considered scientific, it must be based on gathering empirical evidence. Empirical means acquired through observation or experimentation – making claims without experimental evidence is science fiction, not science.

Here, we can already draw our first parallel to testing. We test software to try to learn how it works; like a researcher, our goal is to gain new knowledge. If we already knew everything about the software, there would be no reason to test it! When we test software, we are in fact experimenting and observing the results. Testing is simply gathering empirical evidence. Hence, we can draw the logical conclusion that good testing adheres to the scientific method!

Simplified, the scientific method involves the following workflow:

1. Collect data through observation

2. Propose a hypothesis and make predictions based on that hypothesis

3. Run experiments to test the hypothesis

If the experiments corroborate the hypothesis, additional predictions can then be made and tested. If the experiments instead refute the hypothesis, it is necessary to go back and propose a new hypothesis, given the additional knowledge gained from the experiment.

A trivial example would be:

1. We observe a mouse eating cheddar cheese.

2. Based on this observation, we propose the hypothesis that our mouse will eat all sorts of cheese and, in particular, we predict that our mouse will also eat Swiss cheese.

3. We give our mouse Swiss cheese and eagerly wait to see if the cheese will be eaten.

If the mouse eats the Swiss cheese, our hypothesis has been corroborated and we can predict other consequences, for example, that the mouse will also eat goat cheese. If the mouse does not eat the Swiss cheese, we have to go back and suggest a new hypothesis. Maybe the mouse only likes cheese without holes in it?

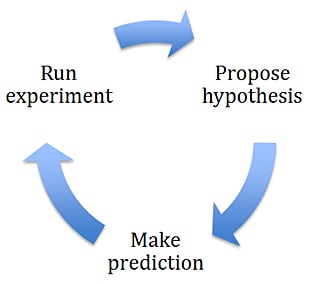

The scientific method is cyclic and dynamic; it involves continuous revision and improvement. Based on observations, a hypothesis is proposed and the consequences of that hypothesis are predicted. Experiments are set up and run to test the hypothesis, and the results are evaluated and used to propose a revised – and improved – hypothesis.

Figure 1: The scientific method. Based on observations, a hypothesis is proposed and a prediction is made. Experiments are set up and run to test the hypothesis, and the results are evaluated and used to propose a revised hypothesis, and so on.

How does the scientific method apply to software testing? Let’s assume we are testing a simple text editor. The workflow of the scientific method maps to software testing as follows:

1. Learn the product and observe how it behaves

2. Identify risks and predict potential failures

3. Execute tests to reveal failures
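
To make the three steps concrete, here is a minimal sketch in Python. The `TextEditor` class, its `type` and `undo` methods, and the chosen inputs are all hypothetical stand-ins invented for illustration; a real editor would be far more complex, and the hypothesis about undo is just one of many we might propose.

```python
class TextEditor:
    """Hypothetical, minimal in-memory editor used only for illustration."""

    def __init__(self):
        self.text = ""
        self._history = []

    def type(self, s):
        # Remember the current text so that undo can restore it.
        self._history.append(self.text)
        self.text += s

    def undo(self):
        if self._history:
            self.text = self._history.pop()


def run_experiment(inputs):
    """Type each input, undo it, and record whether the prediction held."""
    results = {}
    for s in inputs:
        editor = TextEditor()
        editor.type("base")
        before = editor.text
        editor.type(s)
        editor.undo()
        results[s] = (editor.text == before)
    return results


# Step 1 (observe): typing "abc" and then undoing removed "abc".
# Step 2 (predict): undo restores the previous text for any typed input.
# Step 3 (experiment): choose inputs that seem likely to break the prediction.
risky_inputs = ["", "\n", "ü", "a" * 10_000]
results = run_experiment(risky_inputs)
refuted = [s for s, held in results.items() if not held]

print("Inputs that refuted the hypothesis:", refuted)
```

An empty `refuted` list corroborates the hypothesis for these inputs only; it does not prove that undo works in general.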

Based on the results of our tests, we will identify other risks and use this new knowledge to design additional tests. The process of testing a software product has striking similarities with the workflow typically adopted by scientists. But does software testing enjoy the same level of credibility as science?

Credibility

The word science comes from the Latin scientia, meaning knowledge, and when we talk about science, we refer to a systematic search for, and organization of, knowledge. Typically, the word science is associated with authority, expertise and – last but certainly not least – credibility.

Whether we find something credible or not depends on:

1. What we know about the issue – evidence

2. How compatible it is with our own world view

3. Reliability of the source

4. The consequences of accepting or rejecting the issue

We are often more likely to believe statements if we have little knowledge of the topic – we simply do not have any counterevidence. Some sources are also seen as more credible than others; if we read something in the morning paper, we are more likely to believe it than if it is posted on Facebook.

In testing, what we know about the issue equates to our test result. How compatible the behaviour of a piece of software is with our prior experiences has an impact on our expected test result, and therefore might make us biased. The reliability of the test result depends on who tested the product and how it was tested. Finally, there may be direct consequences of reporting or rejecting any particular bug, which could affect our objectivity.

Is the testing done at your workplace seen as credible, and who assesses that credibility? The first step in increasing test credibility is to look at the factors that will raise the likelihood that we will believe in both the process employed and the actual result obtained.

The Science of Testing

What characterizes science is that it makes falsifiable claims, whereas pseudo-science, or non-science, typically makes claims that cannot be tested or refuted. Here, we can draw a second parallel between science and testing. Software testing that embraces the scientific method tries to find ways in which the software fails rather than trying to prove that the software works. How do we recognize the testing equivalent of pseudo-science? How can we protect ourselves, as testers, from being fooled by false claims? The best way is to nurture our inner scientist, and strive for an unbiased and reflective approach to testing.
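
The difference between confirming and trying to falsify can be sketched in a few lines of Python. The `word_count` function below is hypothetical and deliberately naive, and the inputs and expected counts are our own assumptions about what "number of words" should mean.

```python
def word_count(text):
    """Hypothetical word counter with a deliberately naive implementation."""
    return len(text.split(" "))


# Confirmatory check: exercises only the case we already believe works.
confirmed = word_count("the quick brown fox") == 4

# Falsification attempts: inputs chosen because they might break the claim
# "word_count returns the number of words in the text".
attempts = {
    "": 0,
    "two  spaces": 2,
    "trailing space ": 2,
    "line\nbreak": 2,
}

# Keep every input whose actual count differs from the count we expected.
counterexamples = {
    text: word_count(text)
    for text, expected in attempts.items()
    if word_count(text) != expected
}

print("Confirmatory check passed:", confirmed)
print("Counterexamples found:", len(counterexamples))
```

The confirmatory check passes and tells us very little, while each falsification attempt that fails teaches us something new about the software.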